How Healthline.com's Privacy Missteps Sparked a Lawsuit - And What Every Company Must Do to Avoid the Same Fate

By Ramyar Daneshgar

Security Engineer | USC Viterbi School of Engineering

Disclaimer: This article is for educational purposes only and does not constitute legal advice.

Introduction

In July 2025, the California Attorney General filed a complaint against Healthline Media, LLC for violating the California Consumer Privacy Act (CCPA) and the Unfair Competition Law (UCL). The complaint revealed that Healthline’s systems continued to share personal information with advertisers even after consumers opted out, failed to properly structure contracts with ad partners, and transmitted sensitive health-related information such as article titles referencing HIV, Crohn’s, or MS.

The central problem wasn’t simply technical. It was organizational: over-reliance on vendors, failure to test compliance controls, vague contracts, and misleading consumer interfaces.

The Violations

1. Broken Opt-Out Mechanisms

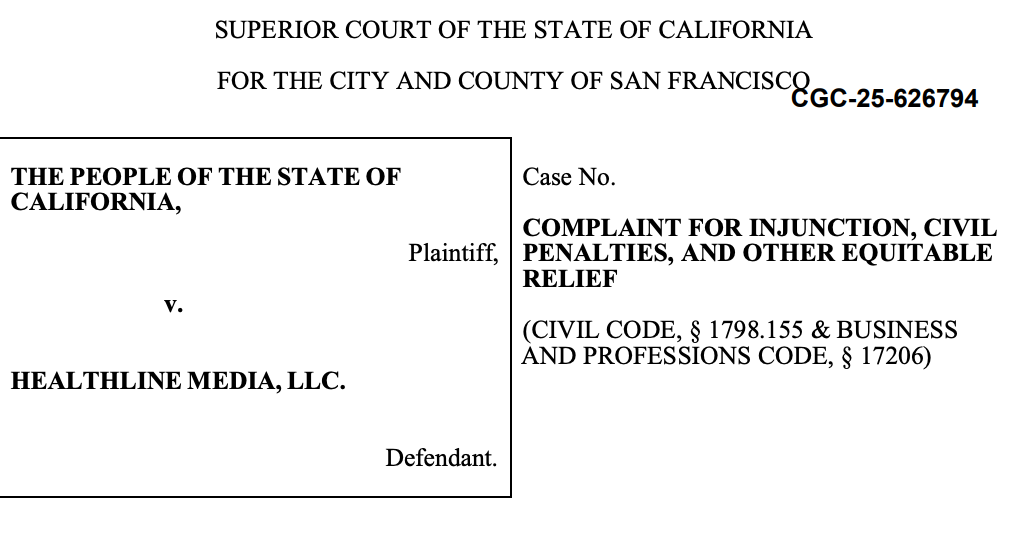

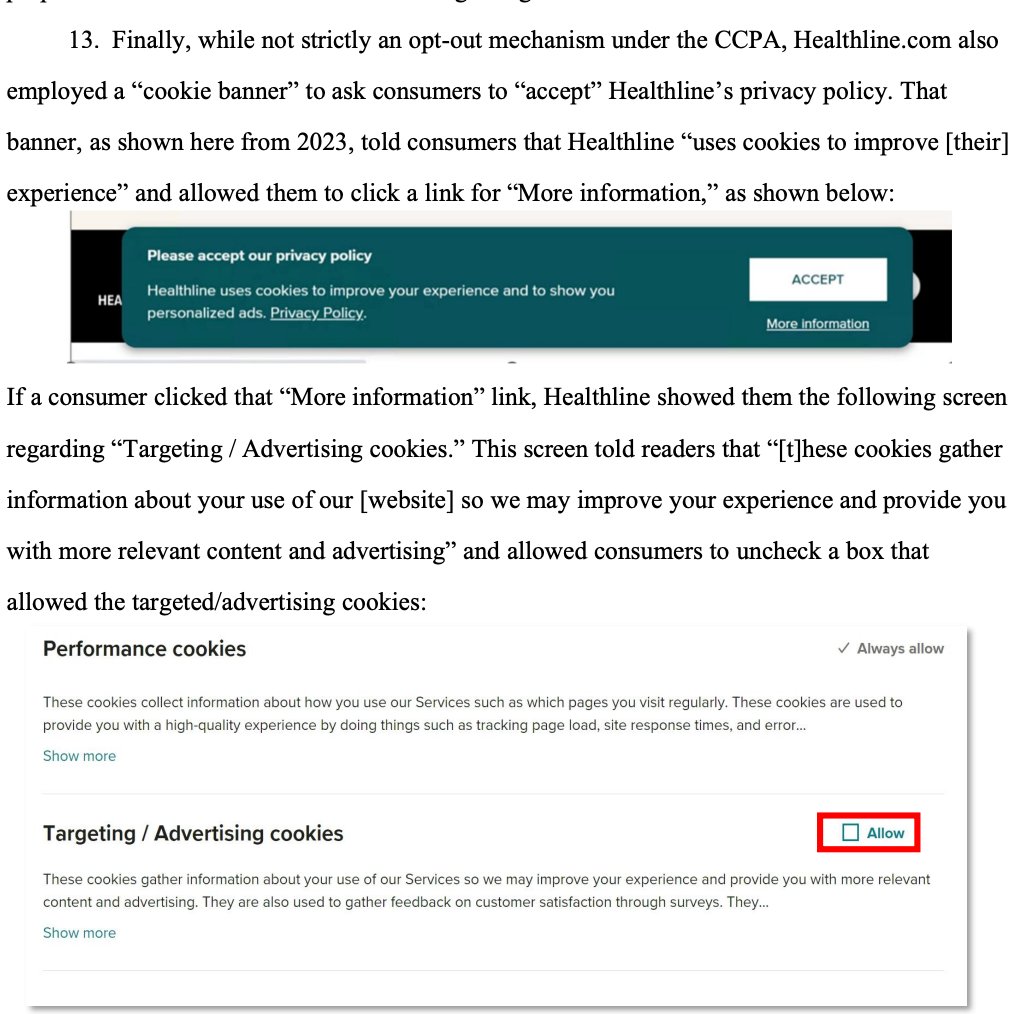

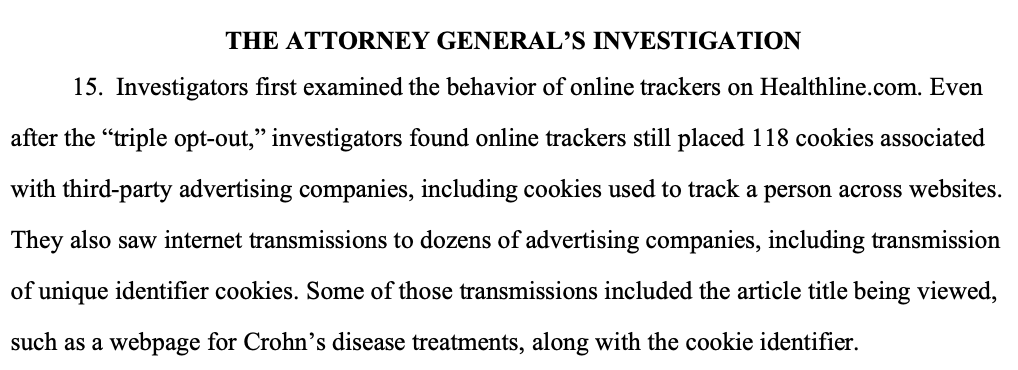

- Investigators tested Healthline’s opt-out form, cookie banner, and Global Privacy Control (GPC) - what they called a “triple opt-out.”

- Despite this, Healthline still set 118 cookies associated with third-party advertising companies and transmitted unique identifiers and full article titles to dozens of advertising companies.

- In short: consumers told the company not to share data, and the company ignored them.

Advice for Companies:

- Simulate consumer opt-outs internally: run automated tests daily to confirm no cookies, pixels, or identifiers are sent once an opt-out request is made.

- Don’t rely solely on vendors: Healthline blamed a privacy vendor for failing to block trackers. Regulators will hold your company responsible, not the vendor.

- Treat GPC as mandatory: the CCPA regulations require businesses to honor opt-out preference signals, and the California Attorney General treats Global Privacy Control as one. Companies must configure websites to detect and honor it.
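The testing advice above can be sketched as a small check over captured network traffic. This is a minimal sketch under stated assumptions, not the method used by investigators or by any vendor: it assumes you already export the URLs a page requests after an opt-out (for example from a browser HAR capture), and the domain list and function names are hypothetical. The `Sec-GPC: 1` request header is the signal defined by the GPC specification.

```python
from urllib.parse import urlsplit

# Hypothetical blocklist; a real one would come from your own data map.
AD_DOMAINS = {"ads.example.com", "tracker.example.net"}


def gpc_enabled(headers: dict[str, str]) -> bool:
    """Return True when the request carries the GPC header `Sec-GPC: 1`."""
    normalized = {key.lower(): value.strip() for key, value in headers.items()}
    return normalized.get("sec-gpc") == "1"


def tracker_violations(request_urls: list[str], ad_domains: set[str]) -> list[str]:
    """Return every URL sent to a listed ad domain or one of its subdomains."""
    hits = []
    for url in request_urls:
        host = (urlsplit(url).hostname or "").lower()
        if any(host == d or host.endswith("." + d) for d in ad_domains):
            hits.append(url)
    return hits


# URLs captured after submitting the opt-out form, unchecking the banner,
# and enabling GPC (the "triple opt-out").
captured = [
    "https://www.example.org/article",
    "https://sync.ads.example.com/pixel?uid=123",
]
violations = tracker_violations(captured, AD_DOMAINS)
```

Any non-empty `violations` list after an opt-out is a failing test. Running the same check daily in CI, per browser, is one way to catch a vendor tool that silently stops blocking.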

2. Sharing Sensitive Health Information

- Healthline transmitted article titles suggesting medical diagnoses such as “Newly Diagnosed with HIV? Important Things to Know” to advertising partners.

- Regulators determined this violated the purpose limitation principle: businesses may only use data “for the purposes for which the [data] was collected” or for another purpose “compatible with the context”. Consumers reasonably expected to read health articles, not to have advertisers infer their conditions.

Advice for Companies:

- Strip sensitive data before transmission: never send article titles, URLs, or on-page text that can imply diagnoses, financial status, or other intimate details. Replace with general categories (“digestive health” instead of “Crohn’s disease”).

- Run data mapping exercises: identify every point where consumer data is collected, transmitted, or inferred. Confirm sensitive data is excluded from ad tech flows.

- Align with expectations: if consumers cannot reasonably foresee a use of their data, don’t assume a privacy policy disclosure makes it acceptable.
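One way to picture "strip sensitive data before transmission" is an allowlist-style payload builder: only a coarse category ever leaves the site. The sketch below is illustrative; the `topic_tag` field, the mapping, and the function name are assumptions, not anything described in the complaint.

```python
# Hypothetical mapping from CMS topic tags to coarse ad categories.
COARSE_CATEGORY = {
    "crohns-disease": "digestive health",
    "ulcerative-colitis": "digestive health",
}
FALLBACK_CATEGORY = "general health"


def build_ad_payload(page: dict) -> dict:
    """Build the only fields allowed to reach ad partners.

    The title, URL, and body text are deliberately never copied, so a new
    field added to `page` cannot leak by accident.
    """
    category = COARSE_CATEGORY.get(page.get("topic_tag", ""), FALLBACK_CATEGORY)
    return {"category": category}


page = {
    "title": "Newly Diagnosed with Crohn's? Important Things to Know",
    "url": "https://www.example.org/health/crohns/newly-diagnosed",
    "topic_tag": "crohns-disease",
}
payload = build_ad_payload(page)
```

Building the payload from an allowlist, rather than deleting known-bad fields from the full page object, means the default for anything unmapped is the fallback category.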

3. Weak Contracts with Advertising Partners

- Some contracts allowed vendors to use consumer data for “any business purpose” or “internal use” with no limits.

- Healthline also failed to ensure that recipients of opted-out consumer data were contractually bound to honor the U.S. Privacy String (a signal that tells partners the consumer opted out).

- Because of this, Healthline could not rely on the CCPA’s safe harbor provision, which protects businesses only if they have “no reason to believe” partners will misuse data.

Advice for Companies:

- Rewrite all advertising contracts: contracts must explicitly state the “limited and specified purposes” for which personal information may be used (Civ. Code § 1798.100(d)).

- Require compliance with privacy signals: contracts must obligate vendors to respect the U.S. Privacy String and GPC.

- Audit vendors against commitments: being a signatory to an industry framework is not enough. Regulators faulted Healthline for assuming compliance without verification.

- Ban broad-purpose clauses: terms like “any business purpose” or “internal use” invite violations. Replace with precise, CCPA-compliant purposes.
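For teams verifying that partners receive and respect the U.S. Privacy String, it helps to know its shape. As published by the IAB, the string is four characters: a version digit, then three flags (notice given, opted out of sale, LSPA covered transaction), each `Y`, `N`, or `-`. The parser below is a minimal sketch under that reading of the format; the names and the fail-closed policy are assumptions for illustration.

```python
import re
from typing import NamedTuple, Optional


class UsPrivacy(NamedTuple):
    version: int
    notice_given: Optional[bool]
    opted_out_of_sale: Optional[bool]
    lspa_covered: Optional[bool]


_PATTERN = re.compile(r"(\d)([YN-])([YN-])([YN-])", re.IGNORECASE)
_FLAG = {"Y": True, "N": False, "-": None}  # "-" means not applicable


def parse_us_privacy(value: str) -> UsPrivacy:
    """Parse a string such as '1YYN'; raise ValueError if malformed."""
    match = _PATTERN.fullmatch(value)
    if match is None:
        raise ValueError(f"malformed US Privacy string: {value!r}")
    version, notice, opt_out, lspa = match.groups()
    return UsPrivacy(
        int(version),
        _FLAG[notice.upper()],
        _FLAG[opt_out.upper()],
        _FLAG[lspa.upper()],
    )


def may_use_for_ads(value: str) -> bool:
    """Fail closed: allow ad use only on an explicit 'N' opt-out flag."""
    try:
        return parse_us_privacy(value).opted_out_of_sale is False
    except ValueError:
        return False
```

Here `may_use_for_ads("1YYN")` is `False` because the third character records an opt-out. Treating malformed or not-applicable strings as opted out is a conservative design choice, not something the format requires.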

4. Misleading Cookie Banner

- Healthline’s cookie banner told consumers they could uncheck advertising cookies. Investigators found the cookies still fired regardless.

- Regulators categorized this as deceptive, since it gave consumers a false sense of control.

Advice for Companies:

- Truth in interfaces: only display controls that actually work. If cookies cannot be disabled, don’t offer the option.

- Test across environments: confirm that cookie banners behave consistently across browsers, devices, and sessions.

- Avoid dark patterns: do not design consent interfaces that mislead consumers or make opt-outs ineffective.
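A banner control only "works" if something downstream actually consults it before a tag fires. The sketch below shows one hypothetical shape for that gate; the tag inventory, category names, and function name are assumptions, and a real site would enforce the same rule in its tag manager or page scripts.

```python
# Hypothetical tag inventory; "category" must match the banner's checkboxes.
TAGS = [
    {"name": "session", "category": "essential"},
    {"name": "page_metrics", "category": "analytics"},
    {"name": "ad_pixel", "category": "advertising"},
]


def tags_to_fire(consent: dict[str, bool], tags: list[dict]) -> list[str]:
    """Return names of tags the visitor's banner choices permit.

    Essential tags always fire; any category missing from `consent`
    is treated as declined.
    """
    allowed = []
    for tag in tags:
        category = tag["category"]
        if category == "essential" or consent.get(category, False):
            allowed.append(tag["name"])
    return allowed


# Visitor unchecked advertising cookies but left analytics on.
consent = {"analytics": True, "advertising": False}
fired = tags_to_fire(consent, TAGS)
```

With these inputs `fired` contains `session` and `page_metrics` but not `ad_pixel`. Comparing this expected list with the tags observed in each browser and device is a direct test of whether the banner tells the truth.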

Root Causes Identified

The Attorney General’s complaint identified systemic weaknesses behind the violations:

- Misconfigured opt-out tools never tested properly.

- Blind reliance on vendors to block trackers.

- Assumptions about contracts instead of verifying compliance.

- Failure to recognize health data sensitivity.

- Consent banners that misrepresented reality.

For executives, this means privacy risks are rarely isolated - they span technology, contracts, vendor oversight, and consumer communication.

Business Risks

- Financial penalties: $2,663 per violation (CCPA), $7,988 per intentional violation, and $2,500 per violation under the UCL.

- Regulatory exposure: following the 2022 Sephora settlement, regulators are signaling a broader campaign against advertising-related data sharing.

- Consumer trust: mishandling health data risks irreparable reputational damage.

- Vendor liability: regulators will not accept “we thought our vendors handled it.”
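To make the per-violation figures in the list above concrete, the arithmetic can be sketched as follows. The violation counts are purely hypothetical, and how violations are counted in practice is a legal question this sketch does not answer; it only shows how quickly the statutory maximums scale.

```python
# Per-violation amounts as stated in the list above (US dollars).
CCPA_PER_VIOLATION = 2_663
CCPA_PER_INTENTIONAL_VIOLATION = 7_988
UCL_PER_VIOLATION = 2_500


def statutory_exposure(ccpa: int, ccpa_intentional: int, ucl: int) -> int:
    """Return the maximum statutory exposure for the given violation counts."""
    return (
        ccpa * CCPA_PER_VIOLATION
        + ccpa_intentional * CCPA_PER_INTENTIONAL_VIOLATION
        + ucl * UCL_PER_VIOLATION
    )


# Hypothetical counts, chosen only for illustration.
exposure = statutory_exposure(ccpa=1_000, ccpa_intentional=100, ucl=1_000)
print(f"${exposure:,}")  # prints "$5,961,800"
```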

Preventing Recurrence: A Roadmap for Executives

Governance & Testing

- Establish quarterly privacy audits that simulate opt-out requests and confirm no trackers fire.

- Deploy automated monitoring tools that continuously scan for unauthorized cookies or pixels.
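Continuous monitoring for unauthorized cookies can start as a comparison between the cookies a page actually sets and an approved inventory. The following is a minimal sketch that assumes you can collect raw `Set-Cookie` header values from a crawl; the allowlist contents and function names are hypothetical.

```python
# Hypothetical inventory of cookies approved for opted-out visitors.
ALLOWED_COOKIES = {"session_id", "csrf_token"}


def cookie_name(set_cookie_header: str) -> str:
    """Extract the cookie name: the text before the first '=' of the first pair."""
    first_pair = set_cookie_header.split(";", 1)[0]
    return first_pair.split("=", 1)[0].strip()


def unauthorized_cookies(set_cookie_headers: list[str], allowed: set[str]) -> list[str]:
    """Return the sorted names of observed cookies missing from the allowlist."""
    observed = {cookie_name(header) for header in set_cookie_headers}
    return sorted(observed - allowed)


# Header values observed during a scan of an opted-out session.
observed_headers = [
    "session_id=abc123; Path=/; HttpOnly",
    "_adid=xyz; Domain=.ads.example.com; Max-Age=31536000",
]
unexpected = unauthorized_cookies(observed_headers, ALLOWED_COOKIES)
```

Anything in `unexpected` should raise an alert. Starting from an allowlist means a newly added third-party script is flagged by default rather than waiting for someone to add it to a blocklist.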

Data Handling

- Implement data minimization: never transmit full article titles or sensitive metadata downstream.

- Adopt category-level anonymization for ad tech integration.

Contracts & Vendors

- Mandate CCPA-compliant language in all contracts: “limited and specified purposes” only.

- Prohibit resale or broad-purpose processing.

- Require written commitments to honor U.S. Privacy Strings and GPC.

- Perform vendor due diligence: certifications, audits, and periodic compliance reviews.
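Contract review is ultimately a job for counsel, but a simple text scan can help triage which agreements to read first. The sketch below flags sentences containing the two broad-purpose phrases quoted earlier in this article; the pattern list and function name are assumptions, and a match is only a prompt for human review.

```python
import re

# Phrases the article identifies as overly broad; extend as counsel advises.
BROAD_PURPOSE_PATTERNS = [
    re.compile(r"\bany\s+business\s+purposes?\b", re.IGNORECASE),
    re.compile(r"\binternal\s+use\b", re.IGNORECASE),
]


def flag_broad_clauses(contract_text: str) -> list[str]:
    """Return the sentences that contain a broad-purpose phrase."""
    flagged = []
    # Split after a period or semicolon followed by whitespace.
    for sentence in re.split(r"(?<=[.;])\s+", contract_text):
        if any(p.search(sentence) for p in BROAD_PURPOSE_PATTERNS):
            flagged.append(sentence.strip())
    return flagged


sample = (
    "Vendor may use Data for any business purpose. "
    "Vendor shall delete Data on request."
)
flagged = flag_broad_clauses(sample)
```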

Consumer Interfaces

- Ensure cookie banners and opt-out forms are functional and truthful.

- Test interfaces under multiple conditions.

- Eliminate misleading controls or “dark patterns.”

Culture & Oversight

- Form a privacy steering committee that includes legal, product, engineering, and marketing.

- Train all stakeholders that compliance is not just legal text - it’s operational practice.

- Create escalation protocols when systems fail, ensuring quick remediation.

Conclusion

The People v. Healthline Media case shows how quickly a privacy framework collapses if systems aren’t tested, contracts aren’t enforced, and vendors aren’t verified.

Executives must recognize that privacy compliance is not static. It requires:

- Continuous testing of opt-out mechanisms.

- Specific, enforceable contracts.

- Privacy by design for sensitive data.

- Consumer-facing transparency that matches reality.

The Attorney General’s own words capture the lesson: businesses must “trust - but verify - that their privacy compliance measures work as intended”.

By following this roadmap, companies can prevent recurrence, safeguard consumer trust, and stay ahead of regulators in an era of heightened privacy enforcement.

| Violation (from Complaint) | What Went Wrong | Corrective Control (Non-Recurrence Measure) |
|---|---|---|
| Broken Opt-Out Mechanisms (opt-out form, GPC, cookie banner failed; 118 cookies still fired) | Opt-out systems were deployed but never validated; consumers’ choices ignored. | - Implement automated daily testing that simulates “triple opt-outs” (form, GPC, banner) and confirms no trackers fire.<br>- Assign privacy engineers to run end-to-end QA scripts using browser dev tools and proxy monitoring.<br>- Require quarterly independent audits of opt-out functionality. |
| Sharing Sensitive Article Titles (“Newly Diagnosed with HIV?” transmitted to ad partners) | Full article titles - which strongly suggested medical diagnoses - were sent downstream to advertisers. | - Apply data minimization: strip article titles and replace with general categories (“digestive health” instead of “Crohn’s disease”).<br>- Enforce purpose limitation by ensuring health-related metadata is never used for cross-context advertising.<br>- Conduct Privacy Impact Assessments (PIAs) before launching new trackers or content partnerships. |
| Weak Advertising Contracts (clauses allowing “any business purpose” or “internal use”; failure to bind vendors to U.S. Privacy String) | Contracts lacked CCPA-required terms (“limited and specified purposes”); vendors could broadly use or resell data. | - Rewrite contracts to include specific, CCPA-mandated terms: limited purpose, prohibition of resale, deletion requirements.<br>- Explicitly require vendors to honor U.S. Privacy String and Global Privacy Control (GPC).<br>- Establish vendor attestation process: annual compliance certifications + random audits. |
| Misleading Cookie Banner (banner claimed consumers could uncheck advertising cookies, but cookies still fired) | Interface gave a false impression of control; regulators considered it deceptive. | - Deploy real-time QA scripts that verify cookie banner settings apply across browsers and devices.<br>- Remove or reword consent options unless they actually function.<br>- Conduct UX compliance testing to detect “dark patterns” and misleading language. |
| Over-Reliance on Vendors (privacy compliance vendor failed to block trackers after opt-outs) | Healthline assumed vendors’ tools worked but never confirmed outcomes. | - Create vendor oversight program with monthly data-flow checks.<br>- Require vendors to provide evidence of opt-out enforcement (not just policy statements).<br>- Build internal capacity: don’t outsource final accountability for privacy compliance. |
| Failure to Recognize Sensitive Nature of Health Data (sharing diagnosis-related page titles without disclosure) | Consumers reasonably did not expect their potential diagnoses to be shared; regulators invoked purpose limitation principle. | - Apply sensitivity classification: flag health, financial, or children’s data for heightened controls.<br>- Disallow invisible sharing of sensitive data even if buried in privacy policies.<br>- Train teams on consumer expectation analysis: test whether disclosures align with what an average consumer would expect. |